Algorithms

SLAM (Simultaneous Localization and Mapping) combines sensor data with computer vision and estimation techniques to build a map of an unknown environment while simultaneously estimating the robot's pose (position and orientation) within it in real time. In our project, we obtained the path in three ways: RTAB-Map with LiDAR data, PySLAM with visual (camera) data, and dead reckoning with IMU data.
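To make "pose" concrete: in 2D a pose is a position (x, y) plus a heading θ, and an odometry or SLAM pipeline repeatedly composes the current pose with a small motion measured in the robot's own frame. The following is a minimal sketch of that composition. The `Pose2D` type and `compose` function are hypothetical names chosen for illustration; they are not part of RTAB-Map or PySLAM.

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    x: float      # position in metres (world frame)
    y: float
    theta: float  # heading in radians, measured from the world x-axis


def compose(pose: Pose2D, delta: Pose2D) -> Pose2D:
    """Apply a motion `delta`, expressed in the robot's own frame, to a world-frame pose."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    theta = pose.theta + delta.theta
    return Pose2D(
        x=pose.x + c * delta.x - s * delta.y,  # rotate the body-frame step into the world frame
        y=pose.y + s * delta.x + c * delta.y,
        theta=math.atan2(math.sin(theta), math.cos(theta)),  # wrap heading into [-pi, pi]
    )


# Drive a 1 m square: move 1 m forward, then turn 90 degrees left, four times.
pose = Pose2D(0.0, 0.0, 0.0)
step = Pose2D(1.0, 0.0, math.pi / 2)
for _ in range(4):
    pose = compose(pose, step)
print(pose)  # approximately (0, 0, 0), up to floating-point error
```

With perfect measurements the square brings the pose back to the origin; with noisy real measurements it would not, and that accumulated error is the drift that SLAM systems try to correct.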

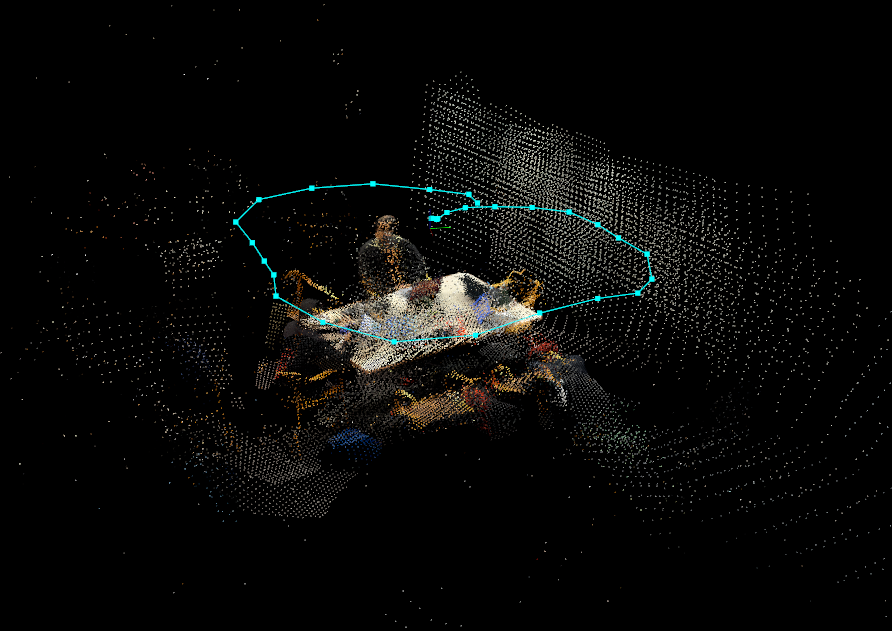

RTAB-Map

Real-Time Appearance-Based Mapping (RTAB-Map) is a graph-based SLAM approach that can be used on mobile robots and autonomous vehicles to build a 2D or 3D map of the environment while simultaneously tracking the robot's position and orientation. It supports RGB-D, stereo, and LiDAR input; in our project we used it with LiDAR data. Its advantages include real-time performance (its memory management keeps processing time bounded as the map grows), loop closure detection, appearance-based mapping, and a compact, lightweight design. RTAB-Map builds the map from features observed in the environment (here, from LiDAR scans) rather than relying on predefined landmarks or markers, which makes it suitable for a wide range of environments. It can also detect when the robot has returned to a previously visited location (loop closure) and use that constraint to correct the drift accumulated along the trajectory in real time, improving the accuracy of the map.
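The way a loop closure corrects drift can be illustrated with a toy one-dimensional pose graph. This is a hypothetical sketch of least-squares pose-graph optimization, not RTAB-Map's implementation: it assumes a single chain of equally weighted odometry edges plus one loop-closure edge, for which the least-squares solution has a closed form. A real system optimizes 2D or 3D poses with a general graph optimizer. The function name and weights are our own.

```python
from typing import List, Sequence


def close_loop_1d(odometry: Sequence[float], loop_offset: float,
                  loop_weight: float = 1.0) -> List[float]:
    """Least-squares 1D positions for a chain of odometry edges plus one loop-closure edge.

    Minimises  sum_i (x_i - x_{i-1} - u_i)^2 + w * (x_n - x_0 - z)^2  with x_0 fixed at 0,
    where u_i are the odometry steps, z = loop_offset and w = loop_weight.
    """
    n = len(odometry)
    if n == 0:
        return [0.0]
    raw_end = sum(odometry)  # end position according to odometry alone (includes drift)
    # Closed-form optimum for this single-loop case: a weighted blend of odometry and loop measurement.
    end = (raw_end + n * loop_weight * loop_offset) / (1.0 + n * loop_weight)
    # Every odometry edge absorbs an equal share of the correction.
    per_step = (end - raw_end) / n
    poses = [0.0]
    for u in odometry:
        poses.append(poses[-1] + u + per_step)
    return poses


# The robot drives out and back to its start (z = 0), but odometry reports about 0.2 m of drift.
odometry = [1.0, 1.0, -0.9, -0.9]
print(close_loop_1d(odometry, loop_offset=0.0, loop_weight=0.0))  # no loop closure: ends near 0.2
print(close_loop_1d(odometry, loop_offset=0.0, loop_weight=1.0))  # ends near 0.04
print(close_loop_1d(odometry, loop_offset=0.0, loop_weight=1e6))  # ends very close to 0.0
```

The larger the loop-closure weight relative to the odometry edges, the more strongly the end of the trajectory is pulled back to the recognized location, and the correction is spread over every step rather than applied only at the end.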

PySLAM 2

PySLAM is an open-source Python framework for developing and testing visual Simultaneous Localization and Mapping (SLAM) pipelines. It is built on top of the OpenCV library and takes camera images as input, for example a video from a single camera or a sequence of stereo images. It supports monocular, stereo, and RGB-D camera configurations. It includes scripts for simple visual odometry (VO) as well as a more complete SLAM pipeline with multi-frame feature tracking, point triangulation, keyframe management, and bundle adjustment. Its advantages include ease of use, a modular design, portability across platforms and environments, open-source availability, and an active community. We used PySLAM to process a video dataset and estimate the camera's path, producing both a 3D and a 2D trajectory.
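Deriving a 2D trajectory from a 3D one can be sketched with two small helpers. These are hypothetical and not part of PySLAM's API: they assume the estimated trajectory has already been exported as a list of (x, y, z) camera positions in OpenCV's camera convention (x right, y down, z forward) with a roughly level camera, so the ground plane is spanned by x and z. For a monocular camera the trajectory is only recovered up to an unknown scale, so the resulting length is in arbitrary units unless the scale is fixed by other means.

```python
import math
from typing import List, Sequence, Tuple


def top_down_trajectory(positions: Sequence[Tuple[float, float, float]]) -> List[Tuple[float, float]]:
    """Project 3D camera positions onto the ground plane by dropping the vertical (y) axis.

    Assumes OpenCV's camera convention (y points down) and a roughly level camera.
    """
    return [(x, z) for x, _, z in positions]


def path_length(points: Sequence[Sequence[float]]) -> float:
    """Sum of straight-line distances between consecutive points (works in 2D or 3D)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


trajectory_3d = [(0.0, 0.0, 0.0), (0.0, -0.1, 1.0), (1.0, -0.1, 1.0)]
trajectory_2d = top_down_trajectory(trajectory_3d)
print(trajectory_2d)               # [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
print(path_length(trajectory_2d))  # 2.0
```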

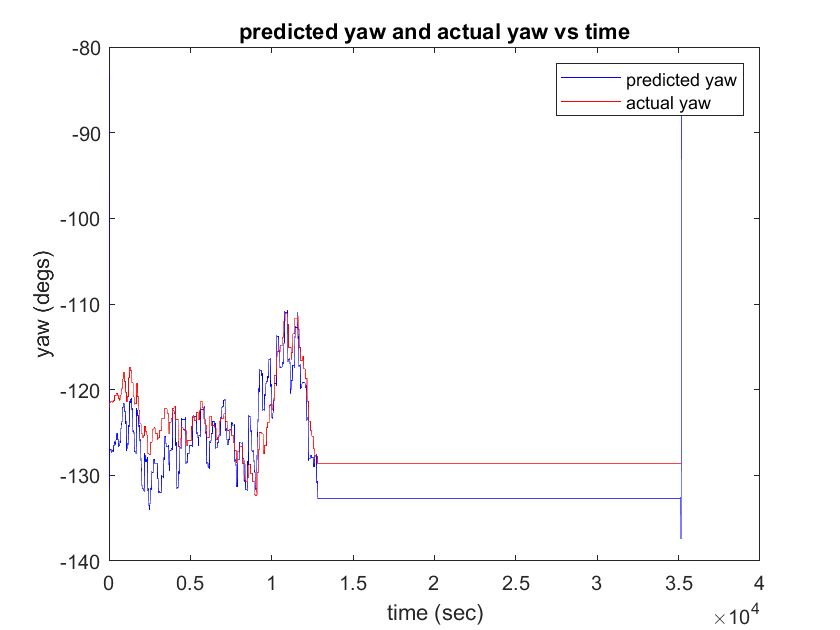

Dead Reckoning

Dead reckoning is a navigation technique that estimates the current position and orientation of a mobile device or vehicle by starting from a known initial pose and accumulating its measured motion over time. It is often used in situations where GPS signals are unavailable or unreliable. In dead reckoning, the device typically uses sensors such as an accelerometer, which measures linear acceleration, a gyroscope, which measures angular velocity, and a magnetometer, which measures the direction of the Earth's magnetic field and so provides a heading reference. Integrating these measurements from the known initial position and orientation yields the current position and orientation; because sensor errors are integrated as well, the estimate drifts over time. We used dead reckoning because PySLAM and RTAB-Map depend on the environment and the features they detect, so the paths they produce are heavily affected by external factors. Dead reckoning takes a different approach: it relies only on raw sensor data to obtain the path.
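A minimal planar dead-reckoning sketch, under simplifying assumptions: the accelerometer reading has already had gravity removed and been projected onto the vehicle's forward axis, and the heading comes from integrating the gyroscope's yaw rate alone (the magnetometer is not used here). `State2D` and `dead_reckon` are hypothetical names; processing real IMU data also requires calibration and bias estimation.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class State2D:
    x: float = 0.0        # metres
    y: float = 0.0        # metres
    heading: float = 0.0  # radians, 0 = world +x axis
    speed: float = 0.0    # m/s along the heading


def dead_reckon(initial: State2D, samples: Iterable[Tuple[float, float]],
                dt: float) -> List[Tuple[float, float]]:
    """Integrate (forward_accel [m/s^2], yaw_rate [rad/s]) samples taken every dt seconds."""
    s = State2D(initial.x, initial.y, initial.heading, initial.speed)
    path = [(s.x, s.y)]
    for accel, yaw_rate in samples:
        s.heading += yaw_rate * dt  # gyroscope: integrate angular velocity into heading
        s.speed += accel * dt       # accelerometer: integrate acceleration into speed
        s.x += s.speed * math.cos(s.heading) * dt  # integrate velocity into position
        s.y += s.speed * math.sin(s.heading) * dt
        path.append((s.x, s.y))
    return path


# 1 m/s while turning at 90 deg/s for 1 s traces a quarter circle of radius 2/pi m.
path = dead_reckon(State2D(speed=1.0), [(0.0, math.pi / 2)] * 1000, dt=0.001)
print(path[-1])  # close to (0.637, 0.637)
```

The double integration also shows why dead reckoning drifts: a constant accelerometer bias b becomes a position error that grows roughly as b·t²/2.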
