Introduction

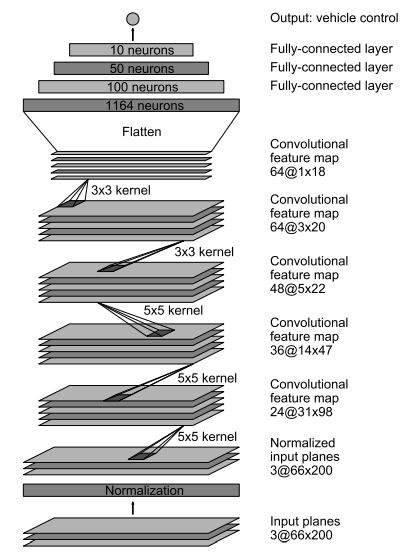

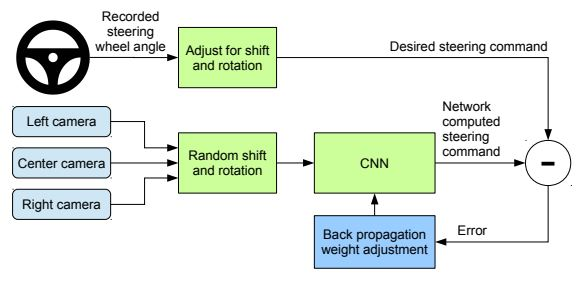

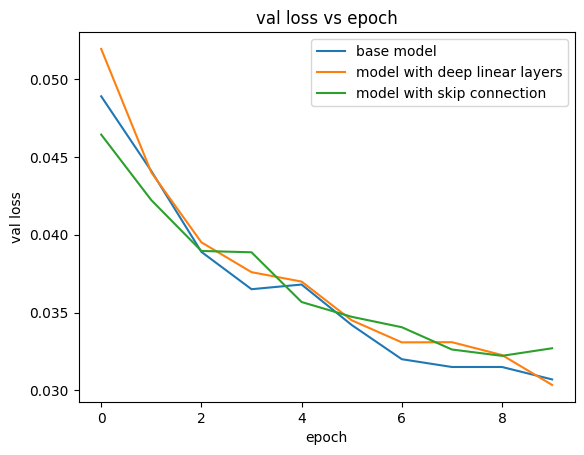

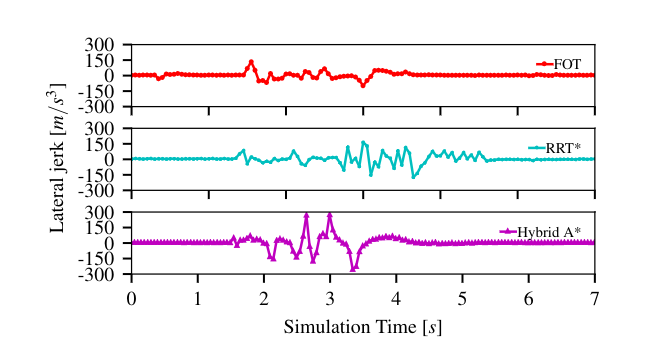

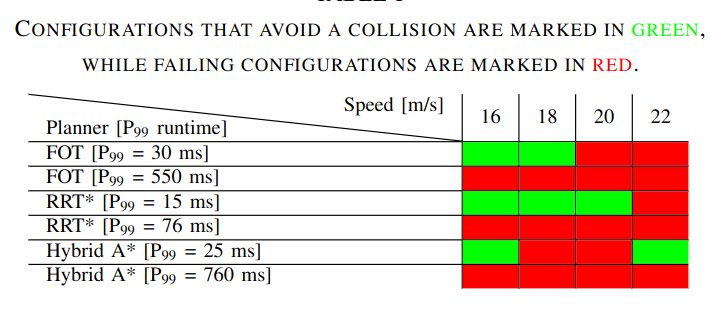

In this project, I set out to build a self-driving car in two parts: an end-to-end driving model trained with behavioral cloning in the Unity simulator, and a separate perception and planning stack in the CARLA simulator. For the behavioral-cloning part, I trained and tested three variants of the NVIDIA model, each built from convolutional layers, ELU activations, and fully connected layers. In CARLA, I implemented a perception stack for object detection and traffic sign prediction, and a planning stack that navigates through traffic using a hybrid of RRT, A*, and Frenet optimal trajectory algorithms.
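To make the NVIDIA-style layout concrete, the sketch below walks a convolutional stack and computes each layer's output size, together with the ELU activation mentioned above. It is a minimal illustration under stated assumptions, not the project's actual code. It assumes the layer configuration published in NVIDIA's end-to-end driving paper (66×200 RGB input, five unpadded convolutions, then fully connected layers of 100, 50, 10, and 1 units). The three variants trained here may differ, and the names `NVIDIA_CONV_LAYERS`, `conv_out`, and `layer_shapes` are hypothetical.

```python
import math

# ASSUMPTION: conv stack from NVIDIA's published end-to-end driving model,
# not necessarily the exact variants trained in this project.
# Each entry: (name, filters, kernel_size, stride); "valid" (no) padding.
# After flattening, the published model uses dense layers of 100, 50, 10, 1 units
# (the final unit predicts the steering angle).
NVIDIA_CONV_LAYERS = [
    ("conv1", 24, 5, 2),
    ("conv2", 36, 5, 2),
    ("conv3", 48, 5, 2),
    ("conv4", 64, 3, 1),
    ("conv5", 64, 3, 1),
]


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential Linear Unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def conv_out(size: int, kernel: int, stride: int) -> int:
    """Output length of an unpadded convolution along one spatial axis."""
    return (size - kernel) // stride + 1


def layer_shapes(height: int = 66, width: int = 200, channels: int = 3):
    """Return [(name, (h, w, c))] for each conv layer and the flattened size."""
    shapes = []
    h, w, c = height, width, channels
    for name, filters, kernel, stride in NVIDIA_CONV_LAYERS:
        h, w, c = conv_out(h, kernel, stride), conv_out(w, kernel, stride), filters
        shapes.append((name, (h, w, c)))
    return shapes, h * w * c


if __name__ == "__main__":
    shapes, flat = layer_shapes()
    for name, shape in shapes:
        print(name, shape)
    print("flatten", flat)
```

With the default 66×200 input, the feature maps shrink to 1×18×64 after `conv5`, so the first fully connected layer receives 1152 features. This shows why the input crop size and the dense-layer width have to be chosen together.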